Use Load Balancers to Provide Port-Based Destination NAT

Summary

Rumble Cloud supports a few different types of address translation. The most common use case is to map a public IP (referred to as a floating IP) to a private IP address attached to a virtual machine (VM) on an internal network.

A floating IP can also be mapped to a load balancer. Typically, a load balancer distributes traffic across multiple VMs to scale a service and provide redundancy. When configured properly, a load balancer can also provide port-based destination NAT.

To set up port-based destination NAT (DNAT), create a load balancer with multiple listeners, each listening on a different port. Associate each listener with a pool that has a single member. This dedicates each port to one specific server.

Note

Using this strategy, you can provide both load balancing and port-based destination NAT on the same IP address.
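As a minimal Terraform sketch of this pattern, the configuration below creates one listener, one pool, and one member for each entry in a map of external port to internal server IP. The variables `dnat_map` and `loadbalancer_id`, the example addresses, and the internal port 22 are hypothetical placeholders; the full template later on this page uses `count` over the VMs instead of a map.

```hcl
# Hypothetical input: external port => internal server IP.
variable "dnat_map" {
  type = map(string)
  default = {
    "22000" = "10.0.10.11"
    "22001" = "10.0.10.12"
  }
}

# Hypothetical: ID of an existing load balancer.
variable "loadbalancer_id" {
  type = string
}

# One listener per external port.
resource "openstack_lb_listener_v2" "dnat" {
  for_each        = var.dnat_map
  name            = "dnat-${each.key}"
  protocol        = "TCP"
  protocol_port   = tonumber(each.key)
  loadbalancer_id = var.loadbalancer_id
  default_pool_id = openstack_lb_pool_v2.dnat[each.key].id
}

# One pool per listener...
resource "openstack_lb_pool_v2" "dnat" {
  for_each        = var.dnat_map
  name            = "dnat-pool-${each.key}"
  protocol        = "TCP"
  lb_method       = "SOURCE_IP_PORT"
  loadbalancer_id = var.loadbalancer_id
}

# ...with exactly one member, so each external port always reaches the same server.
resource "openstack_lb_member_v2" "dnat" {
  for_each      = var.dnat_map
  pool_id       = openstack_lb_pool_v2.dnat[each.key].id
  address       = each.value
  protocol_port = 22 # internal port on the server (assumed to be SSH)
}
```

Adding a server is then a one-line change to the map rather than three new resource blocks.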

The sections below show, step by step, how to do this from the Rumble Cloud console web interface. Because this can get complex, you can also use Infrastructure as Code with Terraform or OpenTofu. A full Terraform template is included.

Web interface examples

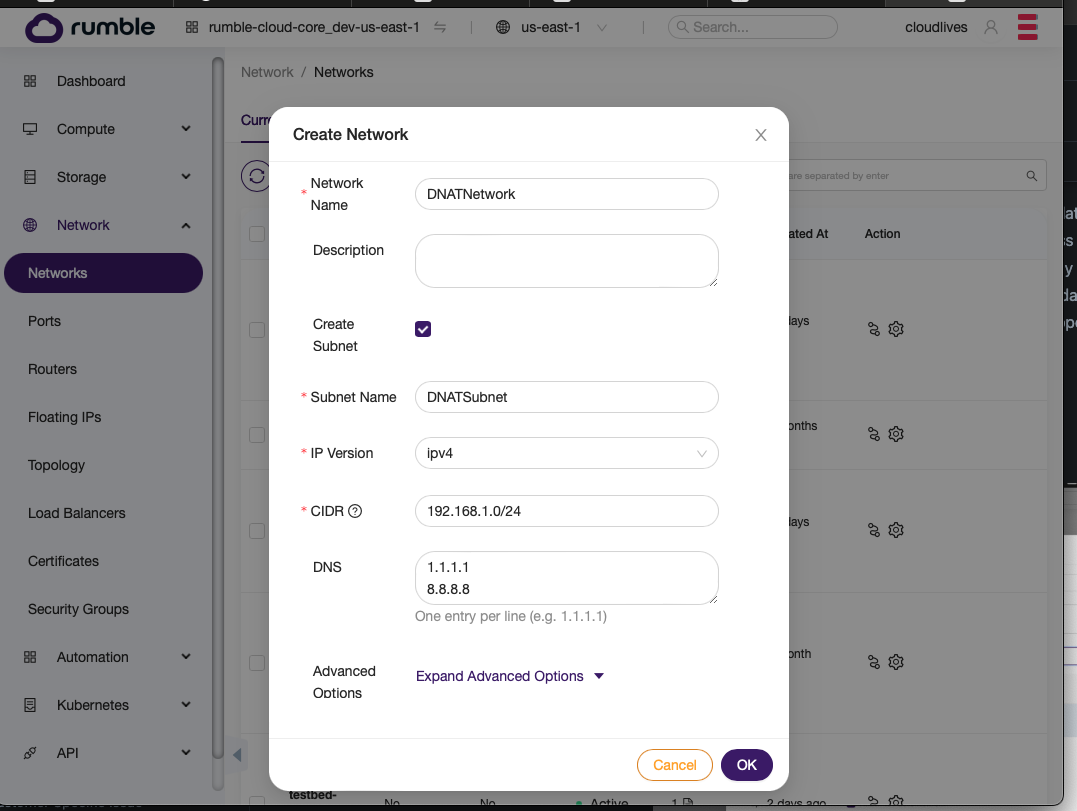

Step 1. Create a network with a subnet.

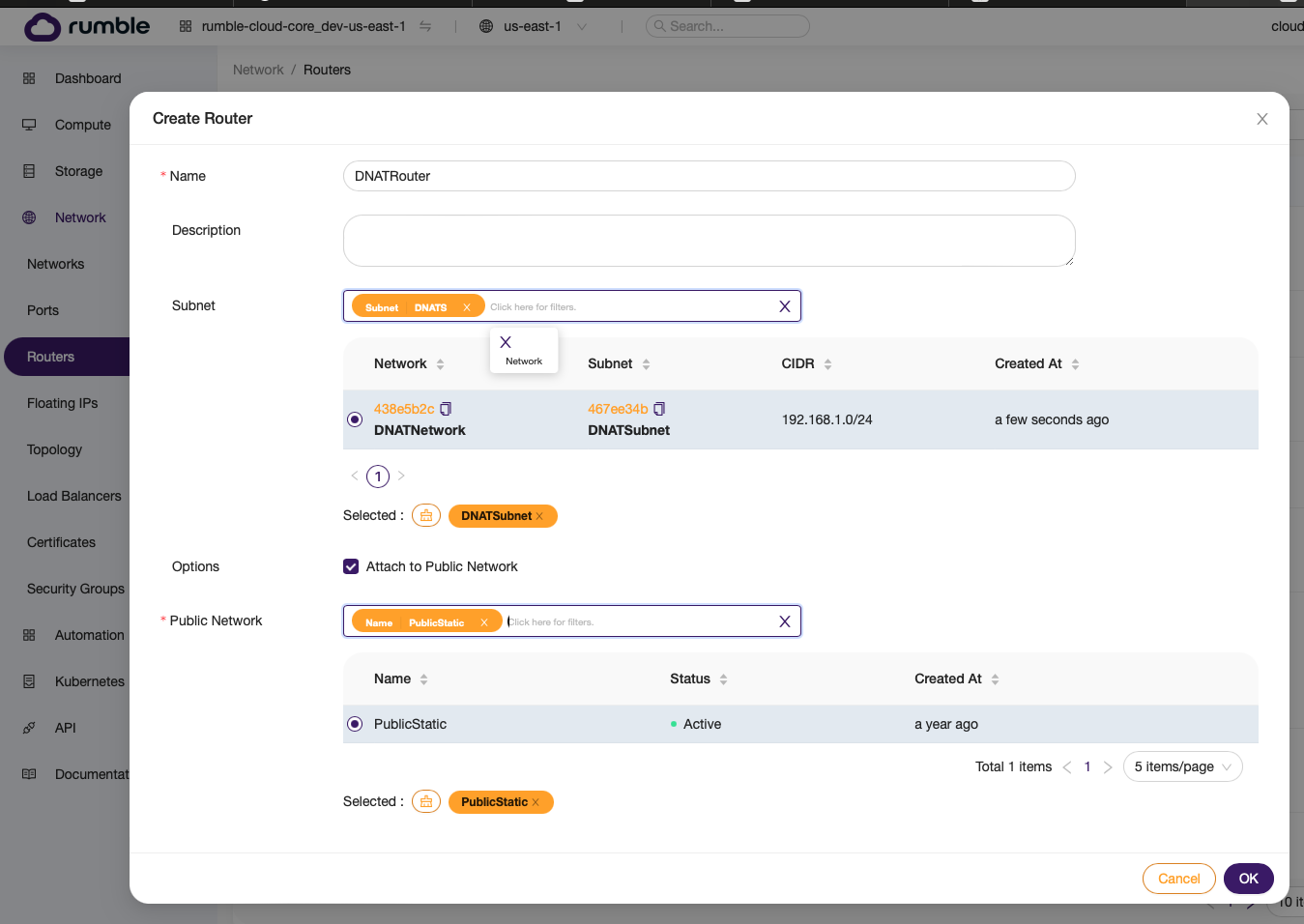
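The same step can be sketched in Terraform as a network plus a subnet. The network's resource name matches the one the template's load balancer references in `vip_network_id`; the subnet name, the name strings, and the CIDR are hypothetical, and `var.system_name` is assumed to be declared as in the full template.

```hcl
# Internal network for the application VMs.
resource "openstack_networking_network_v2" "network_internal_app" {
  name           = "${var.system_name}-network-internal-app"
  admin_state_up = true
}

# IPv4 subnet on that network (hypothetical name and address range).
resource "openstack_networking_subnet_v2" "subnet_internal_app" {
  name       = "${var.system_name}-subnet-internal-app"
  network_id = openstack_networking_network_v2.network_internal_app.id
  cidr       = "10.0.10.0/24"
  ip_version = 4
}
```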

Step 2. Create a router that uses the network.

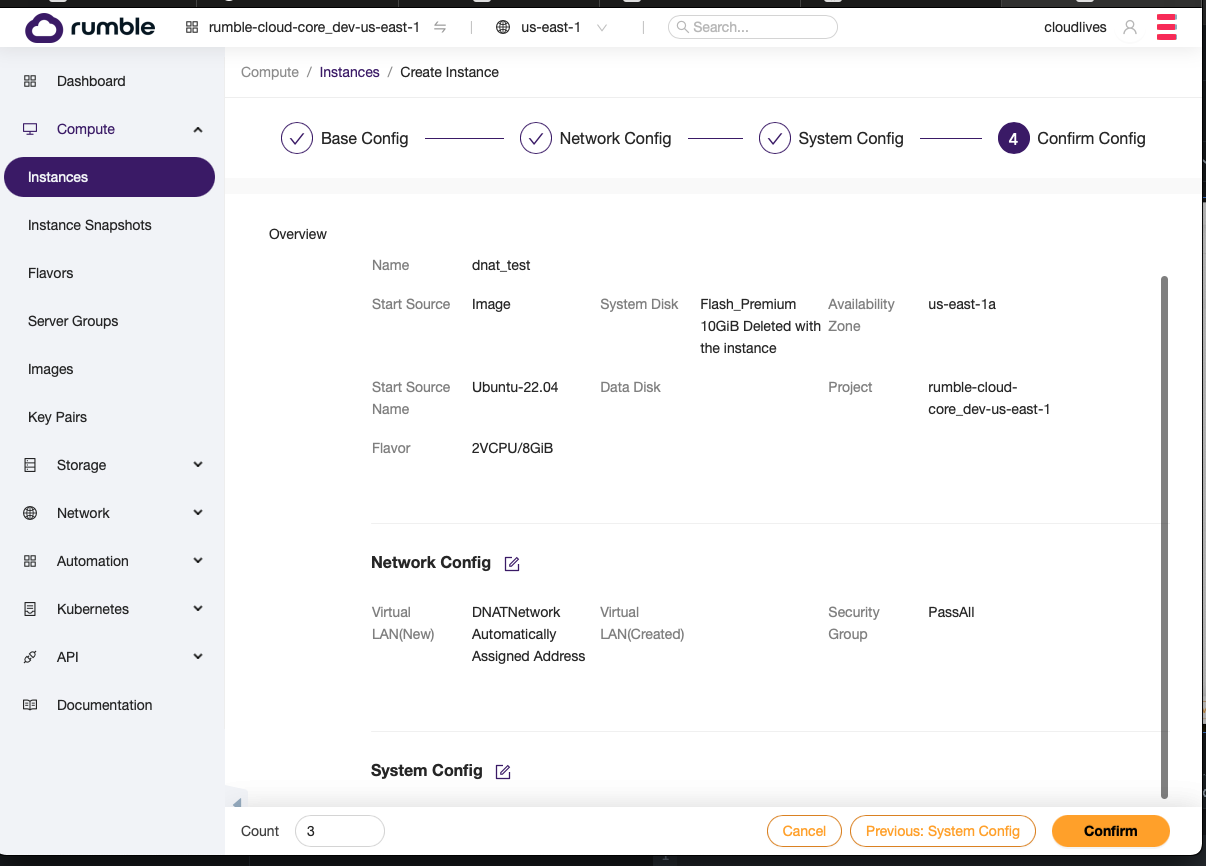
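A Terraform sketch of this step: a router with a gateway on the external network, plus an interface attaching it to the internal subnet. The variable `external_network_id` and the subnet resource name `subnet_internal_app` are hypothetical; look up the external network ID in your own project.

```hcl
# Hypothetical: ID of the external (public) network in your project.
variable "external_network_id" {
  type = string
}

# Router with its gateway on the external network.
resource "openstack_networking_router_v2" "router_app" {
  name                = "${var.system_name}-router-app"
  admin_state_up      = true
  external_network_id = var.external_network_id
}

# Attach the internal subnet so VMs on it can route outbound.
resource "openstack_networking_router_interface_v2" "router_interface_app" {
  router_id = openstack_networking_router_v2.router_app.id
  subnet_id = openstack_networking_subnet_v2.subnet_internal_app.id
}
```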

Step 3. Create two or more VMs on the network.

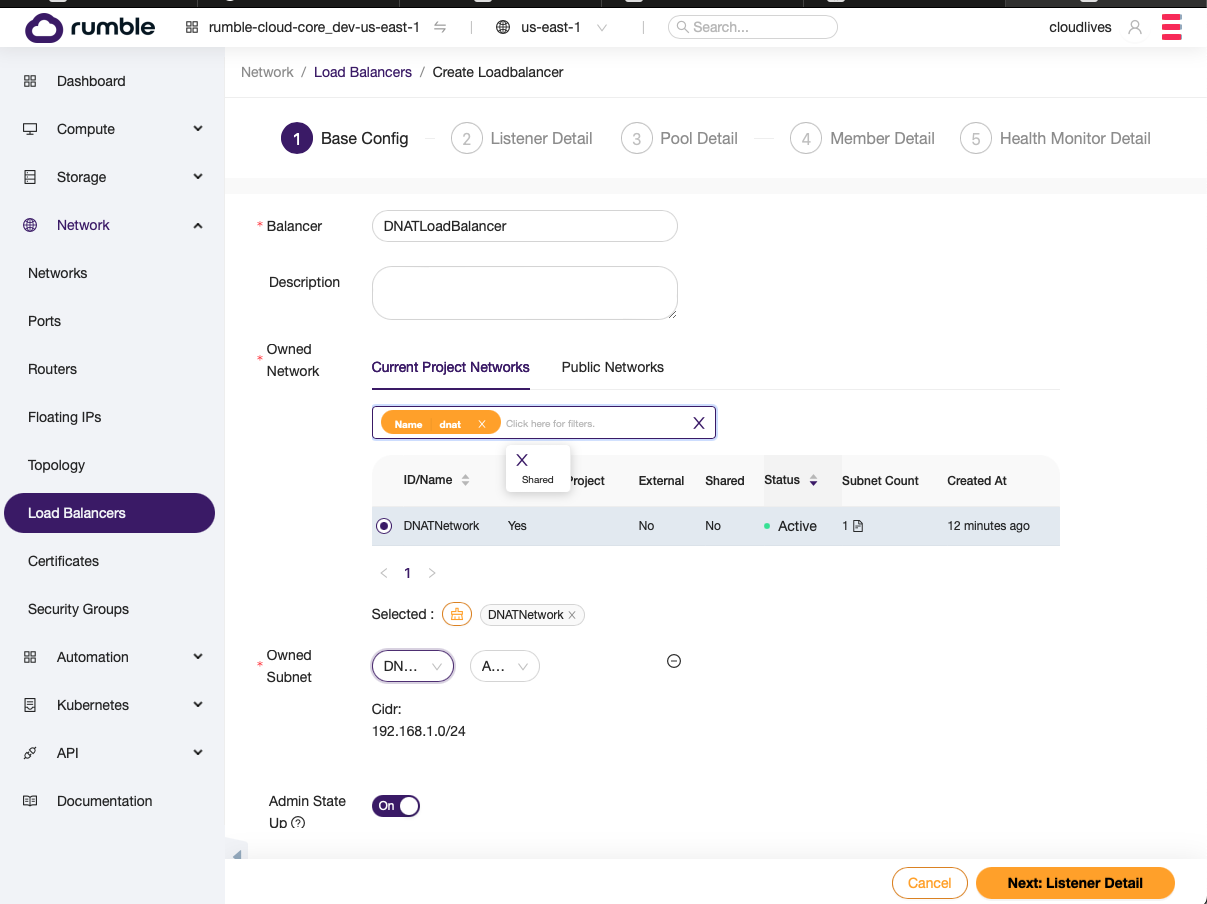

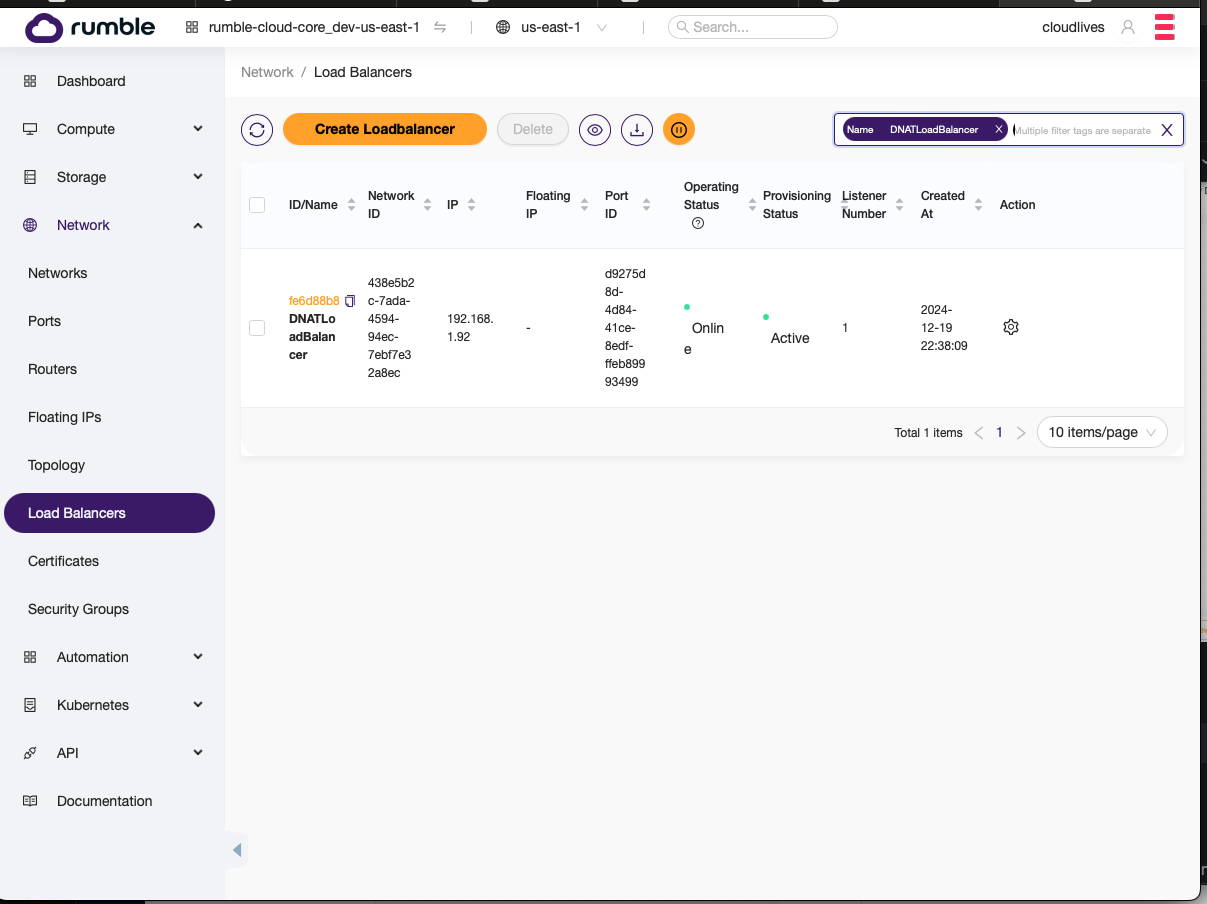
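In Terraform, the VMs can be declared with `count`. The resource name `server_app` matches what the template's members reference (`access_ip_v4`); the image, flavor, and key pair names below are hypothetical and must be replaced with values from your project. Depending on your security groups, you will likely also need rules that allow ports 22, 80, and 443 to reach the VMs.

```hcl
# Three identical application VMs on the internal network.
resource "openstack_compute_instance_v2" "server_app" {
  count       = 3
  name        = "${var.system_name}-server-app-${count.index}"
  image_name  = "Ubuntu 22.04" # hypothetical image name
  flavor_name = "m1.small"     # hypothetical flavor name
  key_pair    = "my-keypair"   # hypothetical existing key pair

  network {
    uuid = openstack_networking_network_v2.network_internal_app.id
  }
}
```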

Step 4. Create a load balancer that provides destination NAT for SSH as well as HTTP/HTTPS load balancing.

- Create the load balancer.

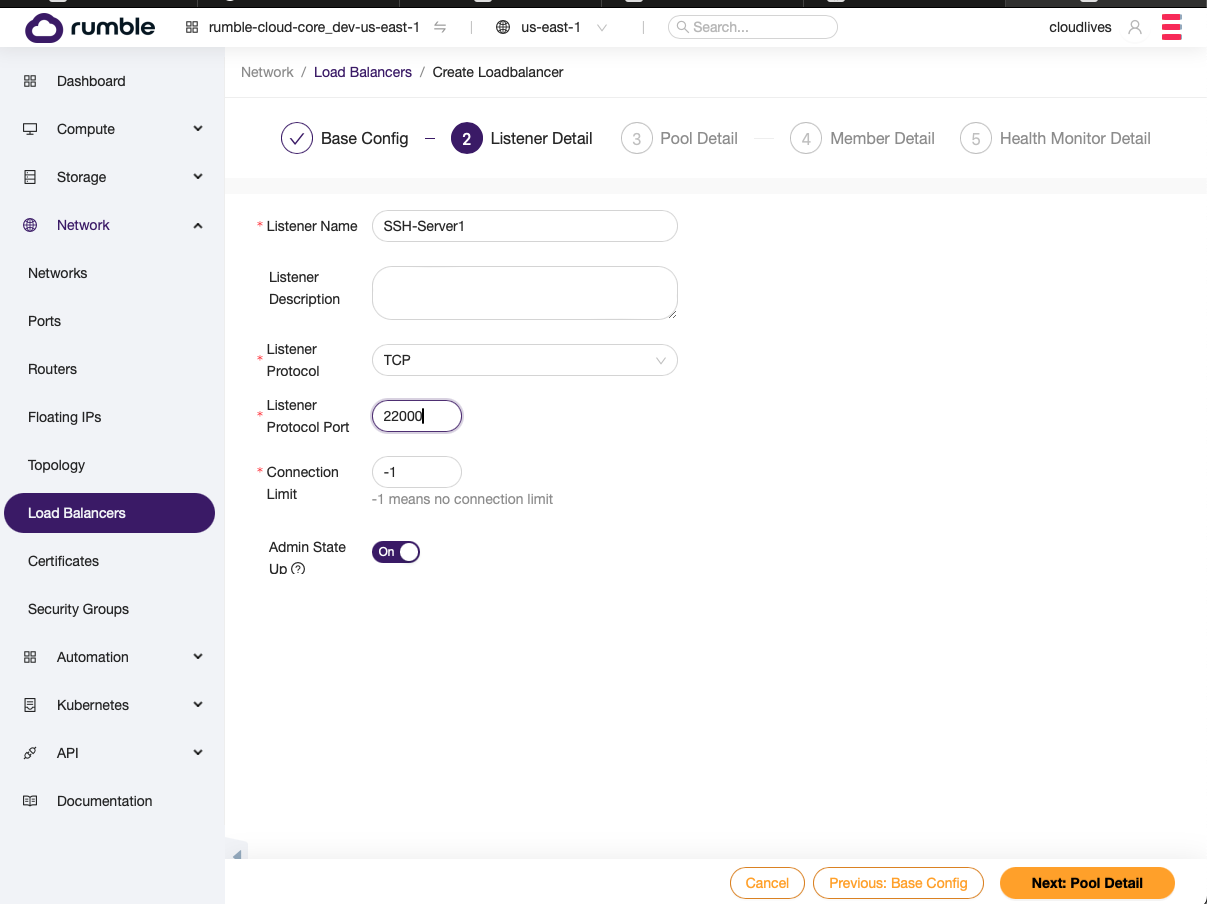

- Set up the first listener.

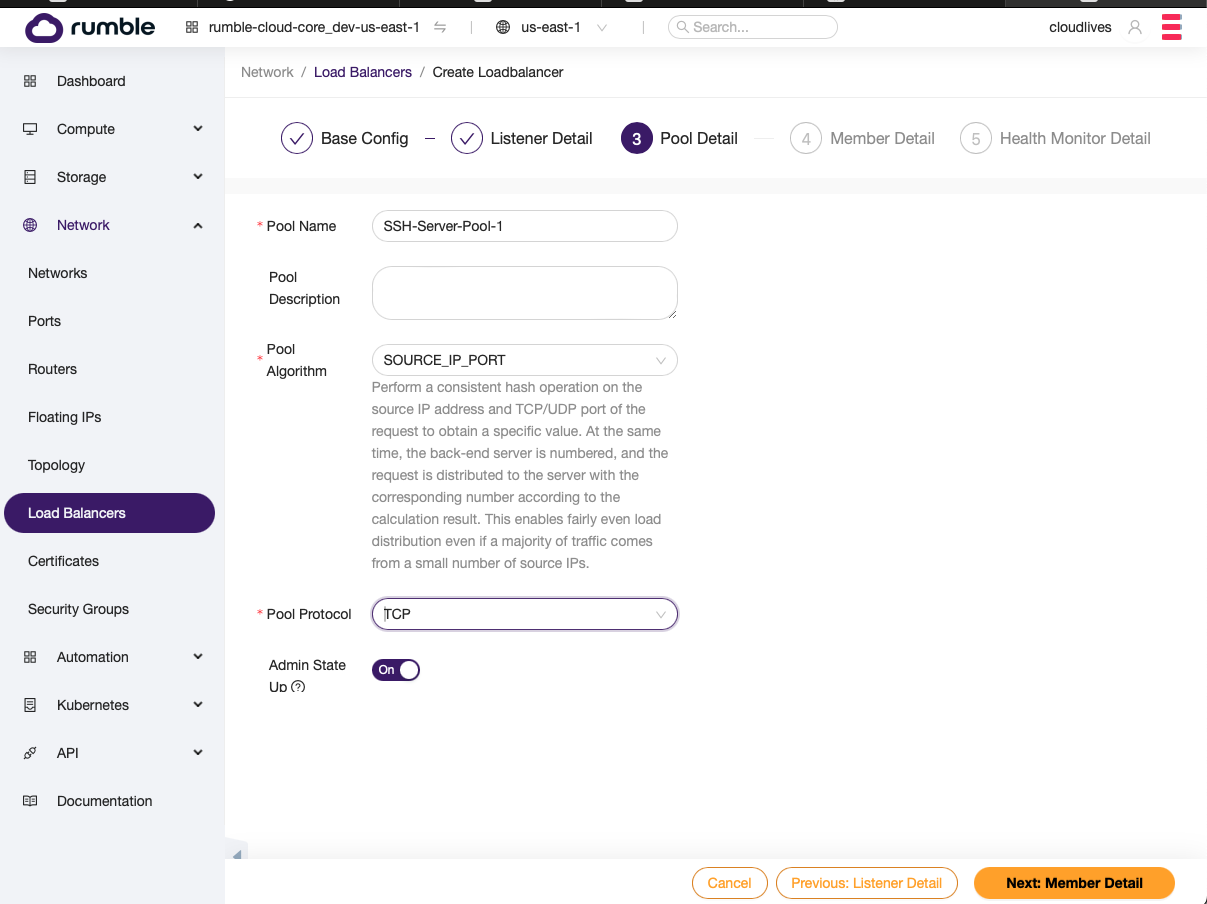

- Set up the first pool.

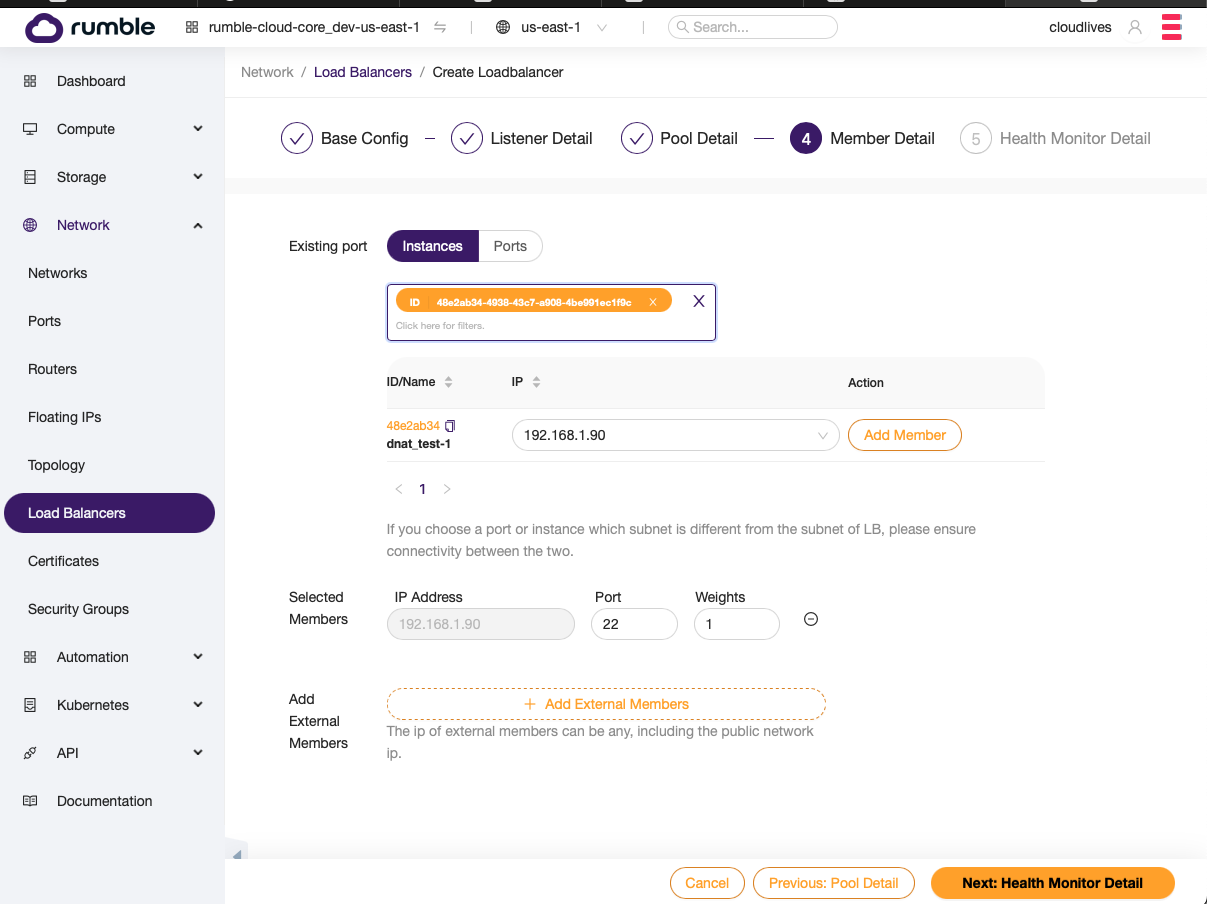

- Add the first server to the pool.

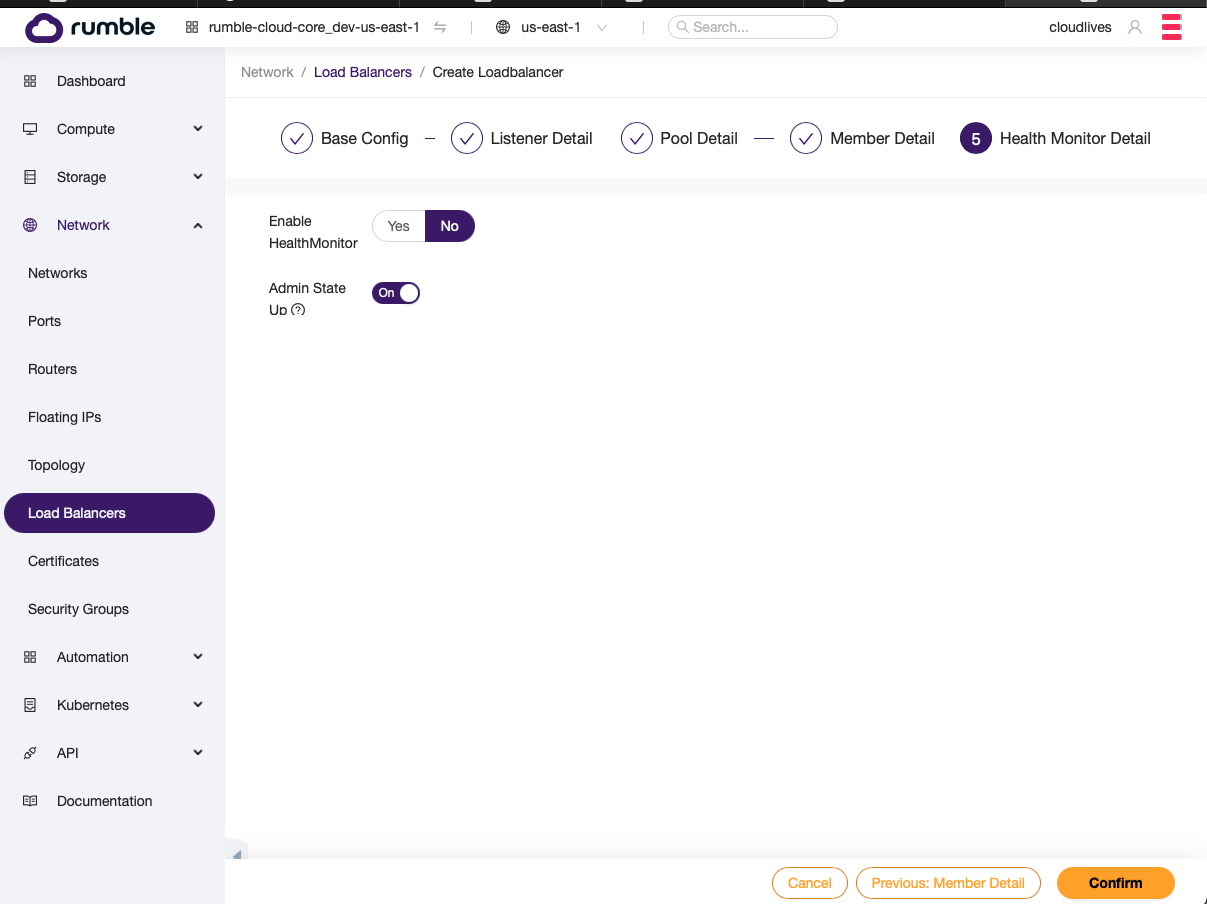

- You can skip the health monitor in DNAT scenarios, since each pool has exactly one member. However, if the Confirm button doesn’t respond, you may need to enter valid values in the Health Monitor form before it will work.

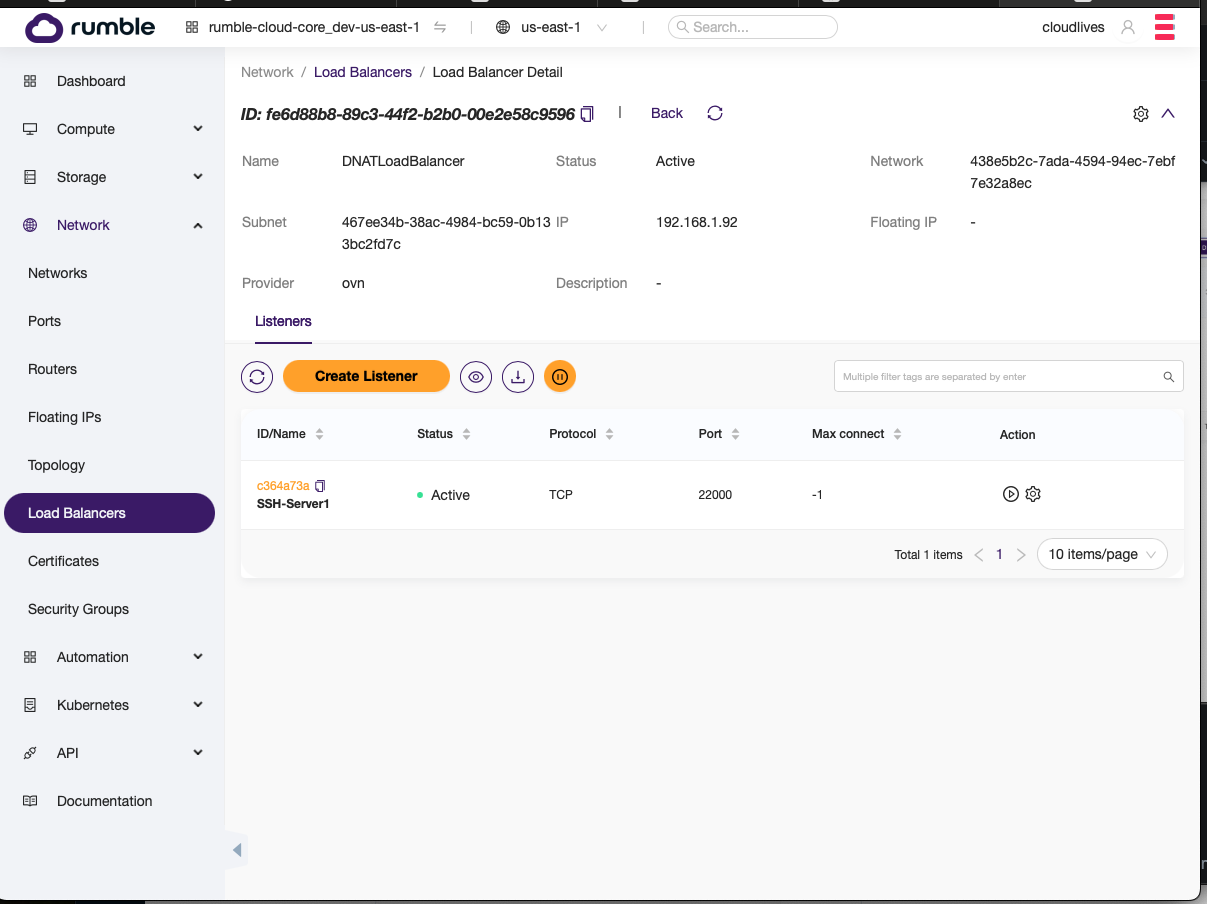
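For comparison with the console steps above, the Step 4 result might be sketched in Terraform as follows. The load balancer block is the same one the full template uses; the `*_ssh_0` resource names are hypothetical, and the VMs are assumed to be `openstack_compute_instance_v2.server_app` declared with `count`. No `openstack_lb_monitor_v2` is declared, matching the choice to skip the health monitor for a single-member pool.

```hcl
resource "openstack_lb_loadbalancer_v2" "loadbalancer_app" {
  name           = "${var.system_name}-loadbalancer-app"
  vip_network_id = openstack_networking_network_v2.network_internal_app.id
}

# First listener: external port 22000 for the first server's SSH.
resource "openstack_lb_listener_v2" "listener_ssh_0" {
  name            = "${var.system_name}-listener-ssh-0"
  protocol        = "TCP"
  protocol_port   = 22000
  loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id
  default_pool_id = openstack_lb_pool_v2.pool_ssh_0.id
}

# First pool, attached to the load balancer.
resource "openstack_lb_pool_v2" "pool_ssh_0" {
  name            = "${var.system_name}-pool-ssh-0"
  protocol        = "TCP"
  lb_method       = "SOURCE_IP_PORT"
  loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id
}

# The pool's only member: the first VM on port 22.
resource "openstack_lb_member_v2" "member_ssh_0" {
  pool_id       = openstack_lb_pool_v2.pool_ssh_0.id
  address       = openstack_compute_instance_v2.server_app[0].access_ip_v4
  protocol_port = 22
}
```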

Step 5. After creating the load balancer with a single listener for the first server, find the load balancer and click it to display its details and add more listeners.

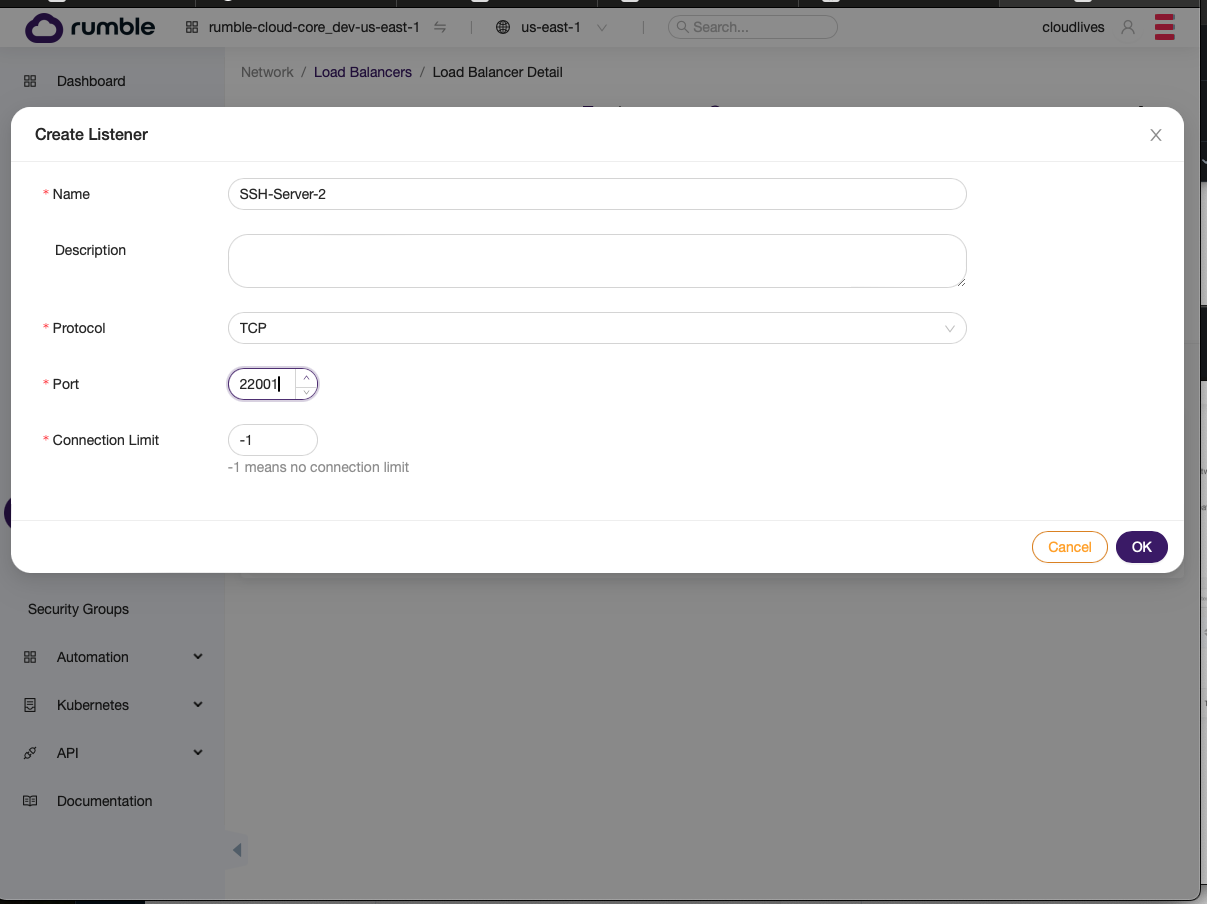

- Select Create Listener.

- Fill in the next port for the new listener.

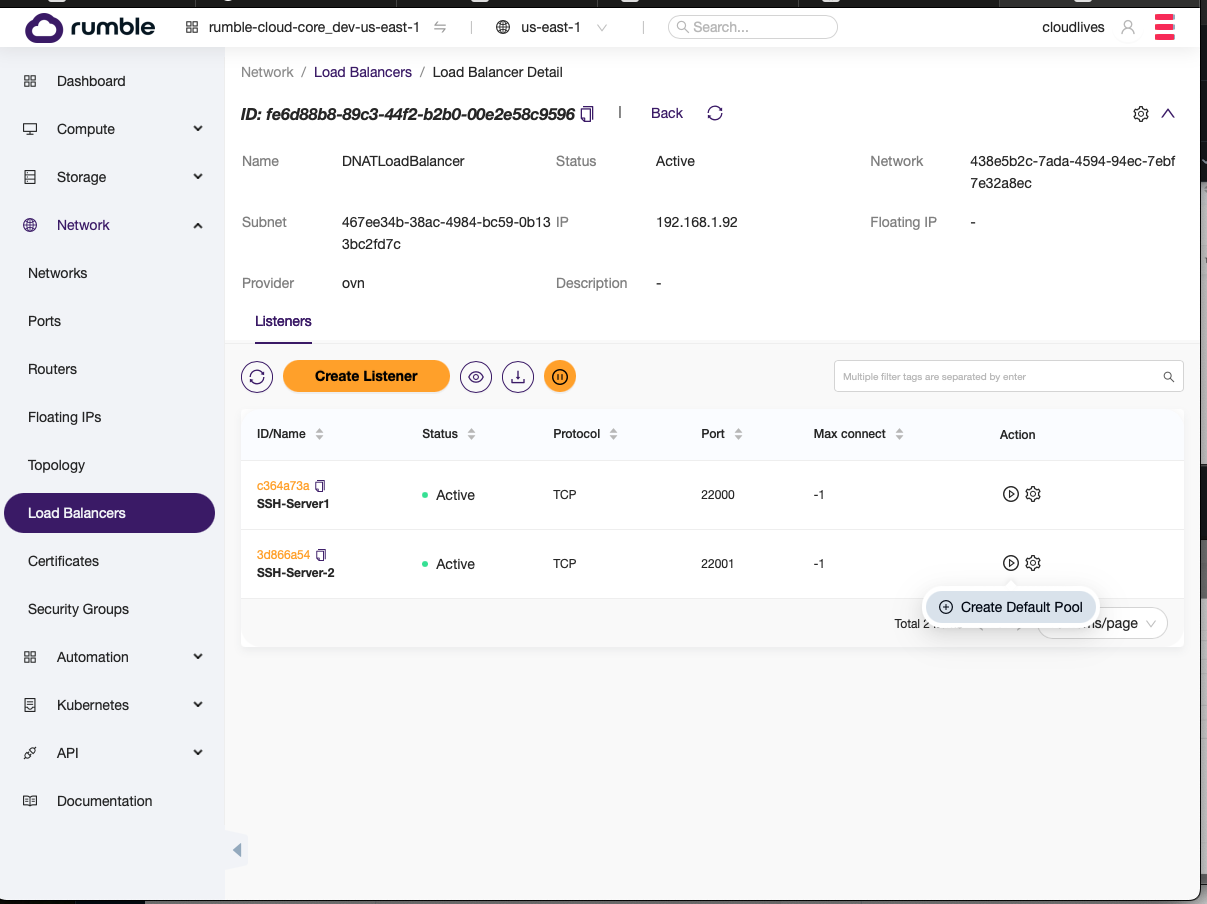

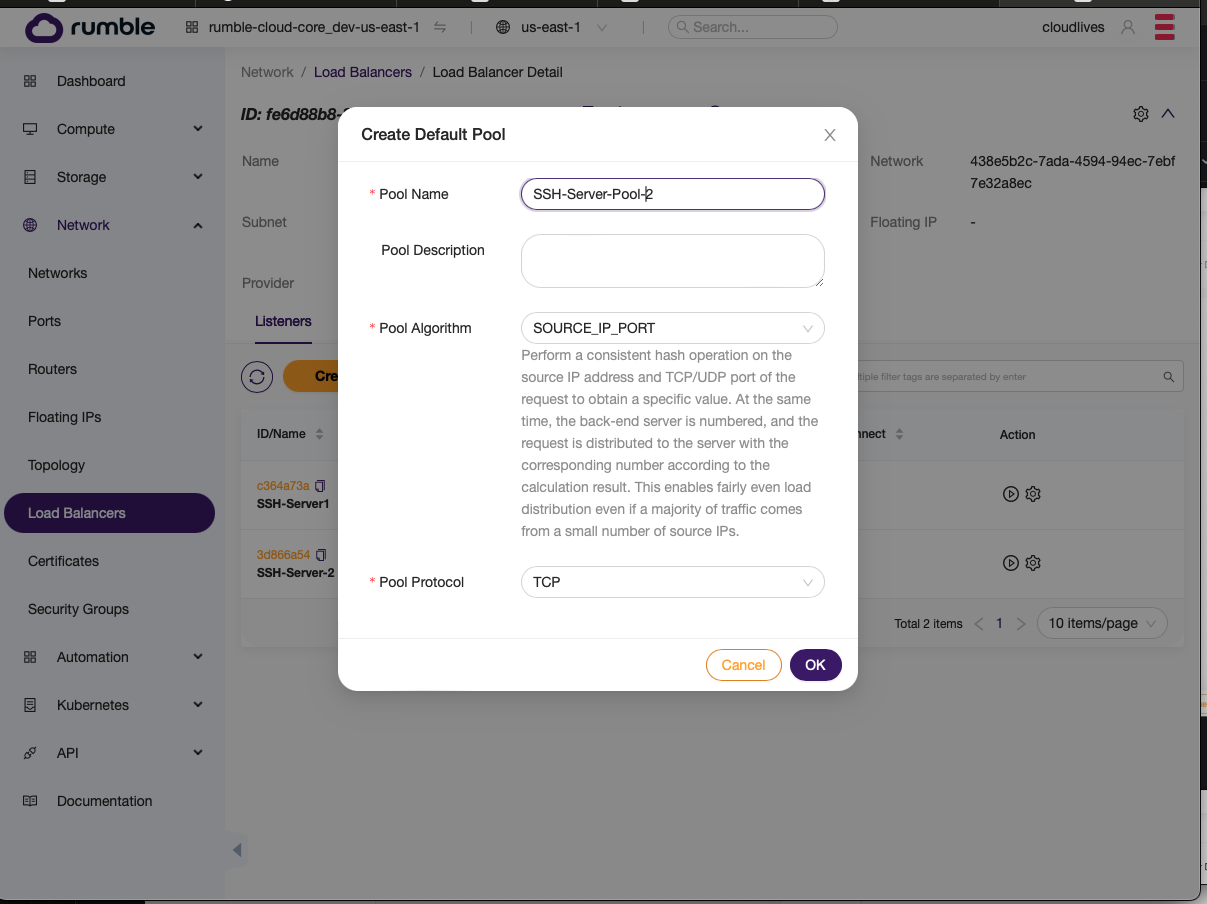

- Once the listener is created, click the first icon next to the listener and select Create Default Pool.

- Populate the information for the pool.

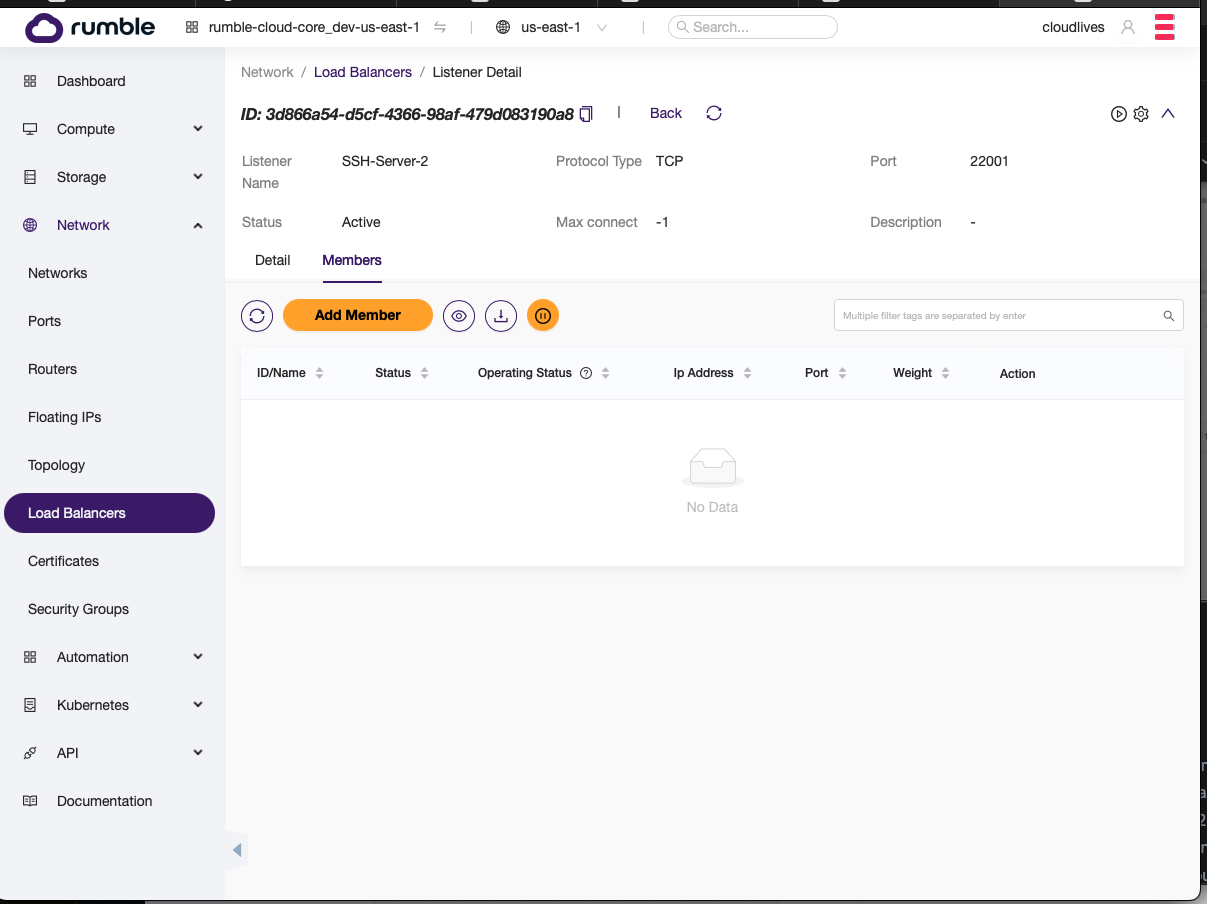

- Select the listener and go to the Members tab.

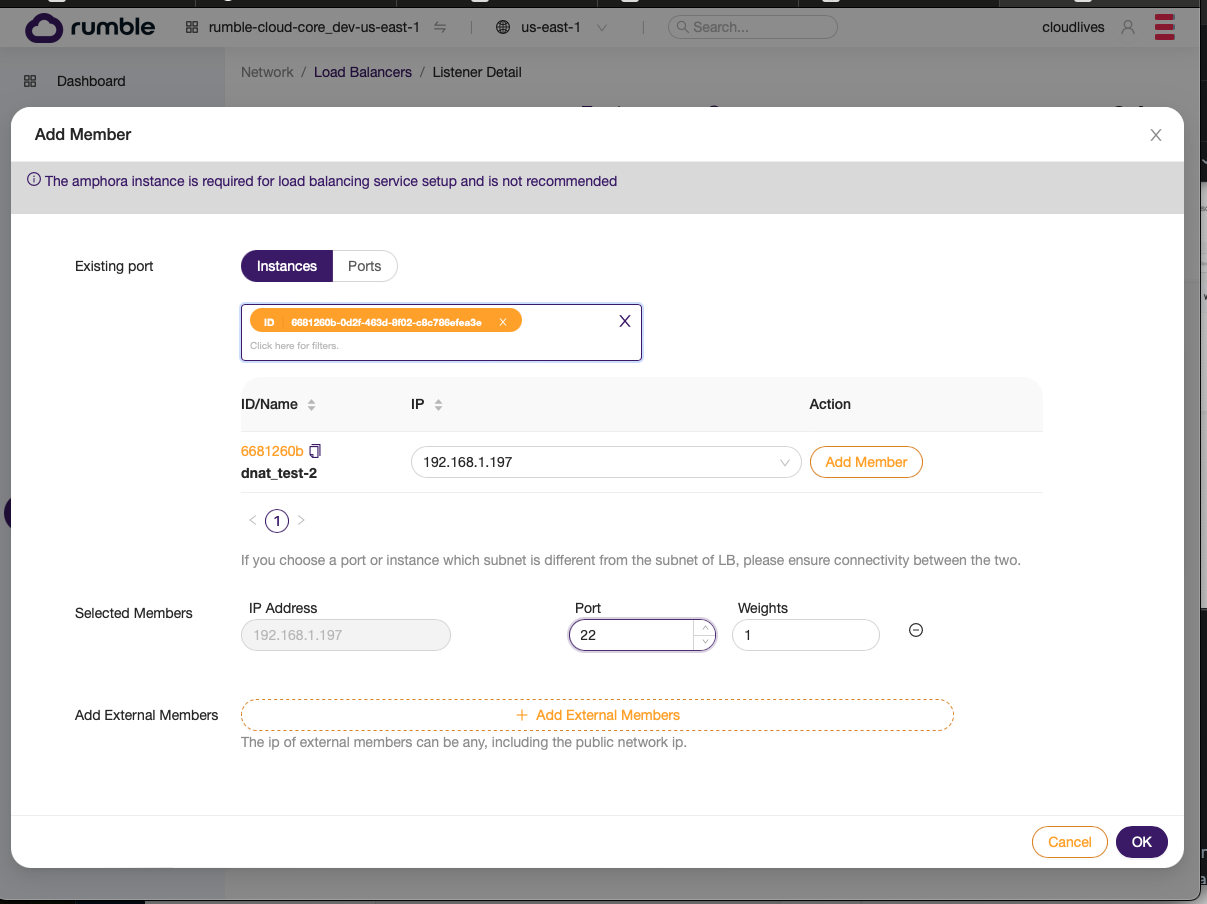

- Select Add Members and add the second SSH host.

- Repeat the steps above to add more single-member listeners and pools on other ports, or add full pools with multiple members for load-balanced services.

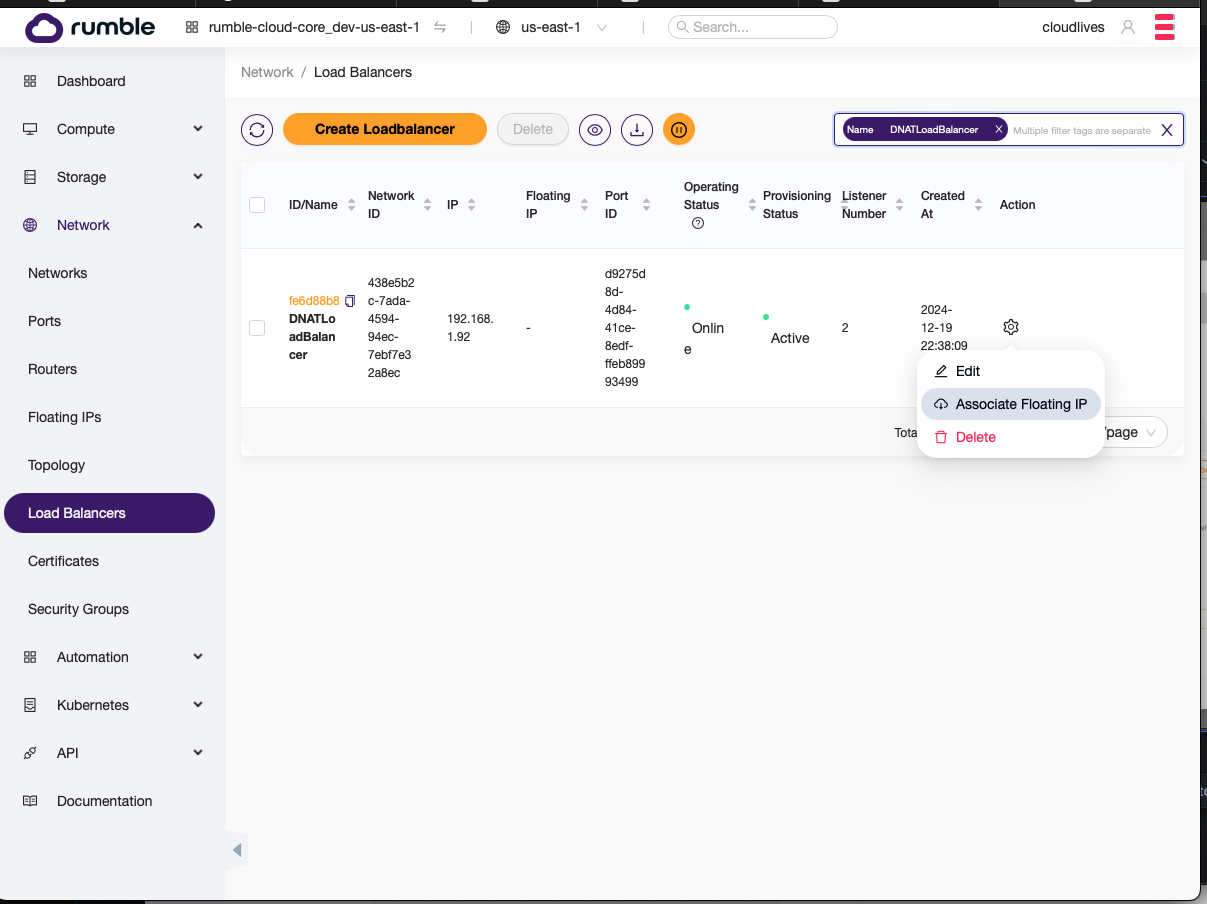

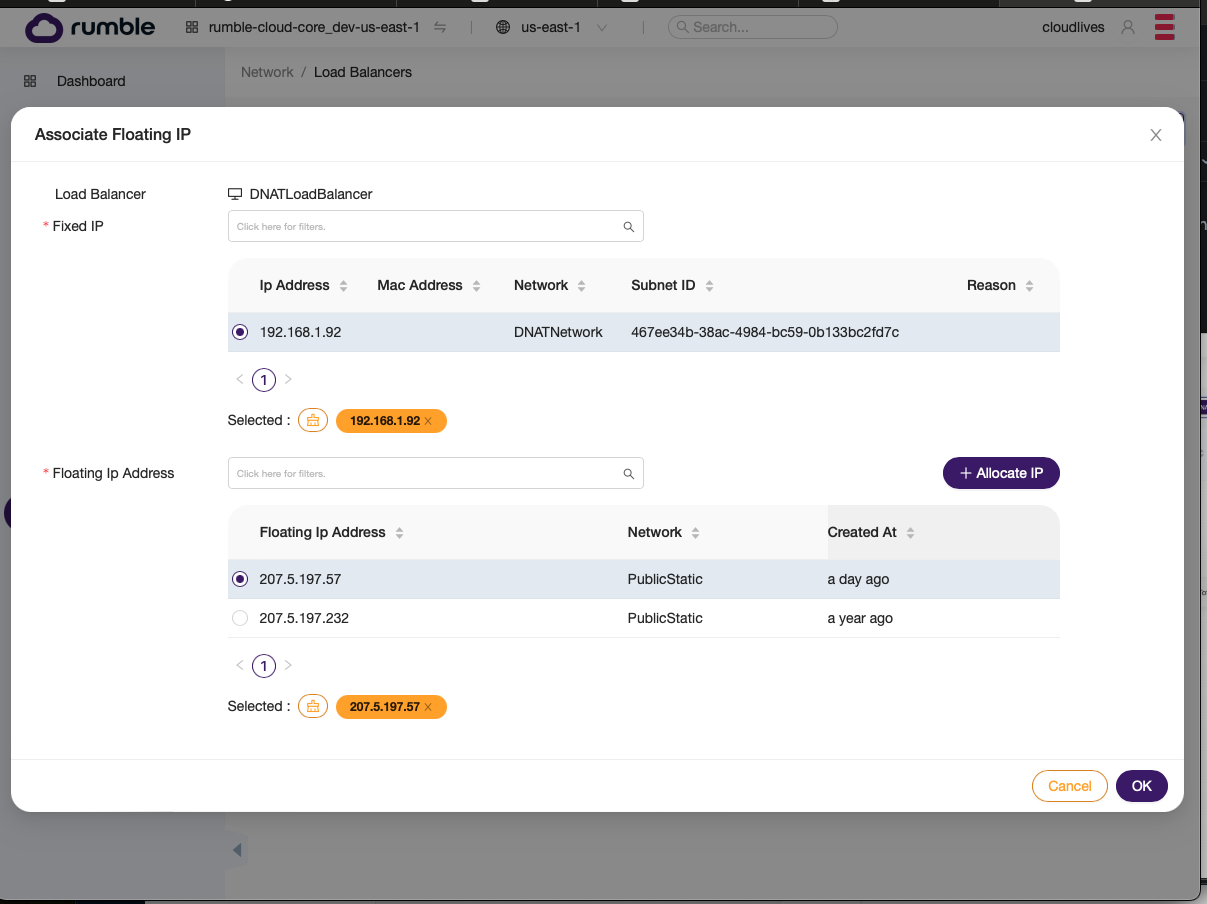
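The console's Create Default Pool action roughly corresponds to creating a pool with `listener_id` set instead of `loadbalancer_id`; in the Octavia API, a pool created against a listener becomes that listener's default pool. A sketch for the second SSH host, with hypothetical `*_ssh_1` names and port 22001 chosen to match the template's numbering:

```hcl
# Second listener, created without a pool first (as in the console).
resource "openstack_lb_listener_v2" "listener_ssh_1" {
  name            = "${var.system_name}-listener-ssh-1"
  protocol        = "TCP"
  protocol_port   = 22001
  loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id
}

# Pool bound to the listener via listener_id, so it becomes the default pool.
resource "openstack_lb_pool_v2" "pool_ssh_1" {
  name        = "${var.system_name}-pool-ssh-1"
  protocol    = "TCP"
  lb_method   = "SOURCE_IP_PORT"
  listener_id = openstack_lb_listener_v2.listener_ssh_1.id
}

# Single member: the second VM's SSH port.
resource "openstack_lb_member_v2" "member_ssh_1" {
  pool_id       = openstack_lb_pool_v2.pool_ssh_1.id
  address       = openstack_compute_instance_v2.server_app[1].access_ip_v4
  protocol_port = 22
}
```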

Step 6. Assign a public IP (i.e., a floating IP) to the load balancer.

- Find the load balancer and select Associate Floating IP.

- Pick a public IP and assign it.
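In Terraform, the load balancer's VIP is a Neutron port, exposed as `vip_port_id`, so the floating IP is associated with that port. A sketch, assuming a hypothetical variable `floating_ip_pool` that names the external network floating IPs are allocated from:

```hcl
# Hypothetical: name of the external network that provides floating IPs.
variable "floating_ip_pool" {
  type = string
}

# Allocate a public (floating) IP.
resource "openstack_networking_floatingip_v2" "fip_app" {
  pool = var.floating_ip_pool
}

# Bind it to the load balancer's VIP port.
resource "openstack_networking_floatingip_associate_v2" "fip_app_assoc" {
  floating_ip = openstack_networking_floatingip_v2.fip_app.address
  port_id     = openstack_lb_loadbalancer_v2.loadbalancer_app.vip_port_id
}

output "app_public_ip" {
  value = openstack_networking_floatingip_v2.fip_app.address
}
```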

Terraform examples

Download the sample files to create a load balancer that provides load-balanced HTTP and HTTPS services on ports 80 and 443. The same load balancer (and therefore the same IP) also provides SSH access to every VM in the application pool, with SSH ports starting at 22000 and increasing by 1 for each VM. The key difference: for a load-balanced service, you put multiple servers in the same pool; for port-based NAT, you put a single server in each pool.

A full Zip archive is attached. The files create three Ubuntu VMs with a load balancer in front that provides load-balanced HTTP and HTTPS on ports 80 and 443, and SSH access to the three VMs on ports 22000, 22001, and 22002.
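To see which external port maps to which VM, an `output` like the following could be added alongside the template. It is a sketch, not part of the downloadable archive, and it simply mirrors the listeners' `protocol_port = 22000 + count.index`.

```hcl
# VM name => external SSH port on the load balancer's public IP.
output "ssh_ports" {
  value = {
    for idx, server in openstack_compute_instance_v2.server_app :
    server.name => 22000 + idx
  }
}
```

With three VMs this yields ports 22000, 22001, and 22002, so `ssh -p 22001 ubuntu@<floating-ip>` would reach the second VM, assuming the image's default `ubuntu` user.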

Here is the key Terraform configuration for creating the load balancer:

resource "openstack_lb_loadbalancer_v2" "loadbalancer_app" {

name = "${var.system_name}-loadbalancer-app"

vip_network_id = openstack_networking_network_v2.network_internal_app.id

}

# HTTP Setup

resource "openstack_lb_listener_v2" "listener_http_app" {

name = "${var.system_name}-app_listener_http"

protocol = "TCP"

protocol_port = 80

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

default_pool_id = openstack_lb_pool_v2.pool_http_app.id

}

resource "openstack_lb_pool_v2" "pool_http_app" {

name = "${var.system_name}-pool_http_app"

protocol = "TCP"

lb_method = "SOURCE_IP_PORT"

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

}

resource "openstack_lb_member_v2" "member_http_app" {

name = "${var.system_name}-member_http_app-${count.index}"

count = length(openstack_compute_instance_v2.server_app)

pool_id = openstack_lb_pool_v2.pool_http_app.id

address = openstack_compute_instance_v2.server_app.*.access_ip_v4[count.index]

protocol_port = 80

}

resource "openstack_lb_monitor_v2" "monitor_http_app" {

name = "${var.system_name}-monitor_http_app"

pool_id = openstack_lb_pool_v2.pool_http_app.id

type = "TCP"

delay = 10

timeout = 5

max_retries = 3

}

# HTTPS Setup

resource "openstack_lb_listener_v2" "listener_https_app" {

name = "${var.system_name}-listener_https_app"

protocol = "TCP"

protocol_port = 443

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

default_pool_id = openstack_lb_pool_v2.pool_https_app.id

}

resource "openstack_lb_pool_v2" "pool_https_app" {

name = "${var.system_name}-pool_https_app"

protocol = "TCP"

lb_method = "SOURCE_IP_PORT"

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

}

resource "openstack_lb_member_v2" "member_https_app" {

name = "${var.system_name}-member_https_app-${count.index}"

count = length(openstack_compute_instance_v2.server_app)

pool_id = openstack_lb_pool_v2.pool_https_app.id

address = openstack_compute_instance_v2.server_app.*.access_ip_v4[count.index]

protocol_port = 443

}

resource "openstack_lb_monitor_v2" "monitor_https_app" {

name = "${var.system_name}-monitor_https_app"

pool_id = openstack_lb_pool_v2.pool_https_app.id

type = "TCP"

delay = 10

timeout = 5

max_retries = 3

}

# SSH ACCESS

resource "openstack_lb_listener_v2" "listener_ssh_app" {

count = length(openstack_compute_instance_v2.server_app)

name = "${var.system_name}-listener_ssh_app-${count.index}"

protocol = "TCP"

protocol_port = 22000 + count.index

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

default_pool_id = openstack_lb_pool_v2.pool_ssh_app.*.id[count.index]

}

resource "openstack_lb_pool_v2" "pool_ssh_app" {

count = length(openstack_compute_instance_v2.server_app)

name = "${var.system_name}-pool_ssh_app-${count.index}"

protocol = "TCP"

lb_method = "SOURCE_IP_PORT"

loadbalancer_id = openstack_lb_loadbalancer_v2.loadbalancer_app.id

}

resource "openstack_lb_member_v2" "member_ssh_app" {

count = length(openstack_compute_instance_v2.server_app)

name = "${var.system_name}-member_ssh_app-${count.index}"

pool_id = openstack_lb_pool_v2.pool_ssh_app.*.id[count.index]

address = openstack_compute_instance_v2.server_app.*.access_ip_v4[count.index]

protocol_port = 22

}

resource "openstack_lb_monitor_v2" "monitor_ssh_app" {

count = length(openstack_compute_instance_v2.server_app)

name = "${var.system_name}-monitor_ssh_app-${count.index}"

pool_id = openstack_lb_pool_v2.pool_ssh_app.*.id[count.index]

type = "TCP"

delay = 10

timeout = 5

max_retries = 3

}
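The excerpt above references `var.system_name` and assumes the OpenStack provider is already configured; the full archive presumably covers this. If you are assembling the configuration yourself, a minimal scaffold might look like the following. The `clouds.yaml` entry name `rumble` and the default prefix `demo` are hypothetical; the provider can also read credentials from the standard `OS_*` environment variables instead of `cloud`.

```hcl
terraform {
  required_providers {
    openstack = {
      source = "terraform-provider-openstack/openstack"
    }
  }
}

# Credentials from a clouds.yaml entry (hypothetical name).
provider "openstack" {
  cloud = "rumble"
}

# Prefix applied to every resource name in the template.
variable "system_name" {
  type    = string
  default = "demo" # hypothetical default
}
```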